The shift towards competency-based education and what it means for assessing endoscopic skills

Objective assessment of cognitive and technical skills in gastrointestinal endoscopy is widely regarded by clinical educators as the gold standard for measuring a trainee’s competency. Objective assessment tools can track skill acquisition, meaningfully support promotion and credentialing decisions, and provide quality assurance for clinical practice.

However, current practices for assessing technical skills remain subjective, unstructured, and susceptible to bias. Many gastroenterology training programs rely on expert observation paired with global rating scales and procedure-specific checklists, or on quantitative surrogate measures of endoscopy skill such as threshold numbers, to gauge a trainee’s procedural ability. These approaches require considerable expert supervision and time away from clinical duties, and do not account for variability in endoscopists’ styles and expectations. Threshold numbers and case volumes also correlate poorly with trainee competency.

More recently, the push towards quality metrics and competency-based education has prompted an effort to create evaluation tools that allow for objective, valid, and reliable assessment of clinical performance, with the goal of ensuring that trainees acquire the knowledge, skills, values, and attitudes required of competent, independent physicians. This effort is spearheaded by governing medical bodies such as the Royal College of Physicians and Surgeons of Canada and the Accreditation Council for Graduate Medical Education (ACGME) in the United States. For example, the Next Accreditation System (NAS), a continuous assessment reporting system rolled out in 2014, centers on achievement of competency requirements and progressive milestones throughout training, and on documentation of performance. Rather than assessing a single procedure at the end of training, or counting the total number of procedures without regard to their qualitative content, periodic evaluation throughout a fellow’s education can help guide training goals and progression through learning curves.

Surgical simulators offer a unique approach to objective skills assessment

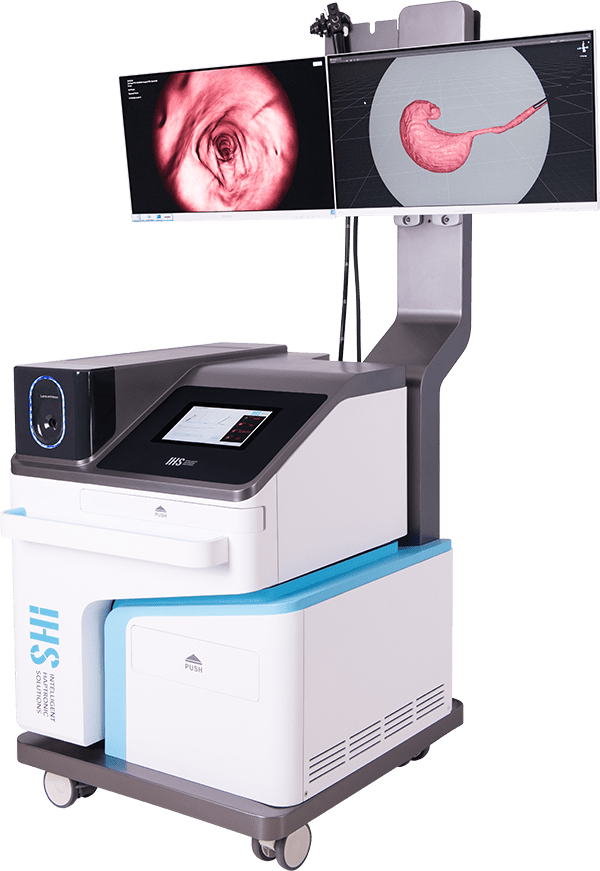

Virtual reality surgical simulators, which recreate the surgical experience in simulated anatomical models or environments, have the potential to provide a standardized, objective means of measuring endoscopic skills. Already used as a learning tool in early endoscopy training, validated, high-fidelity surgical simulators can be leveraged to distinguish levels of procedural dexterity and competency. This is particularly attractive given that simulated scenarios are readily reproducible and unbiased by the anatomical and physiological variability present in clinical cases.

Perhaps the biggest challenge in using virtual reality surgical simulators as objective assessment tools is demonstrating construct validity (the degree to which the test items for a set of procedures identify the quality, ability, or trait they were designed to measure) and linking simulator outcome measures and parameters to clinical performance. Presently, few studies of existing virtual reality simulators for gastrointestinal endoscopy show construct validity.

Examples of simulator outcome measures and parameters include:

- Degree of loop formation

- Total time with a loop

- Percentage of the mucosal surface examined or landmark visualization

- Time to task completion (e.g., time to the pylorus for an upper endoscopy procedure)

- Efficiency of screening for pathology identification

- Time with a clear endoscopic view

- Excessive local pressure

- Patient discomfort or pain due to excessive air insufflation or pressure

- Time with patient discomfort or pain
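
To make the time-based measures in the list above more concrete, here is a minimal Python sketch of how they could be derived from a time-stamped simulator log. The log format, the `Sample` fields, and the function names are hypothetical and chosen purely for illustration; they do not correspond to any particular simulator's output, and a real device may record and report these measures quite differently.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    """One time-stamped reading from a hypothetical simulator log."""
    t: float                  # seconds since the start of the procedure
    loop_present: bool        # a loop is currently formed
    clear_view: bool          # the endoscopic view is clear
    patient_discomfort: bool  # the simulated patient reports discomfort or pain


def time_in_state(samples, attr):
    """Total seconds for which `attr` was true.

    Assumption: each reading holds until the next reading arrives.
    """
    total = 0.0
    for current, following in zip(samples, samples[1:]):
        if getattr(current, attr):
            total += following.t - current.t
    return total


def summarize(samples):
    """Collapse one recorded session into a few time-based measures."""
    return {
        "time_to_completion_s": samples[-1].t - samples[0].t,
        "time_with_loop_s": time_in_state(samples, "loop_present"),
        "time_with_clear_view_s": time_in_state(samples, "clear_view"),
        "time_with_discomfort_s": time_in_state(samples, "patient_discomfort"),
    }


# Made-up readings for illustration only; these are not real trainee data.
session = [
    Sample(0.0, False, True, False),
    Sample(10.0, True, True, False),
    Sample(25.0, True, False, True),
    Sample(30.0, False, True, False),
    Sample(40.0, False, True, False),
]

print(summarize(session))
```

A summary like this only describes performance on the simulator. Whether any of these numbers reflects clinical competency is exactly the construct validity question raised above.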